Blog

Introducing Elm Bootstrap

01 March 2017

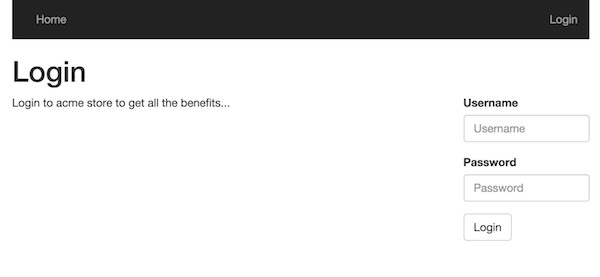

TweetToday I’m happy that I can finally announce version 1.0.0 of Elm Bootstrap. When I set out to develop Elm Bootstrap, my goal was to make an Elm package that makes it easy to build responsive and reliable web applications in Elm using Twitter Bootstrap. This version is the first step towards that goal.

What is it then ?

Elm Bootstrap is a fairly comprehensive library package that wraps the upcoming Twitter Bootstrap 4 CSS framework. It provides a range of modules and functions to make it pleasant and reasonably typesafe to create a Bootstrap styled web application in Elm without giving up too much on flexibility. Most of Twitter Bootstrap is opt-in and the same applies to Elm Bootstrap. That means that you can pick and choose which parts you wish to use for your application.

| You will find modules in Elm Bootstrap that corresponds to most of what Twitter Bootstrap refers to as components. There are no such thing as components in Elm, there are only functions, and functions can be grouped into modules When I speak about modules you know I’m talking about Elm and when you see components mentioned you know it’s about Twitter Bootstrap. |

-

Layout related

-

Grid - Provides functions to easily create flexbox based responsive grid (rows, columns) layouts.

-

Text - Helper functions for working with text alignment

-

-

Forms

-

Form, Input, Select, Checkbox, Radio, Textarea and Fieldset - These modules provides functions to create nice Bootstrap styled forms with a lot of flexibility

-

-

Interactive elements

-

Tab, Accordion, Modal, Dropdown and Navbar are modules that provide functions to work with interative elements. In Twitter Bootstrap, the corresponding components are backed by JavaScript in Elm Bootstrap it’s all Elm of course.

-

-

Misc

-

Alert, Badge, Button, Card, Listgroup and Progress provide you functions to create elements that correspond to their Twitter Bootstrap counterpart.

-

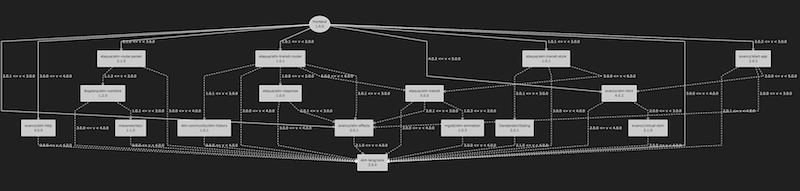

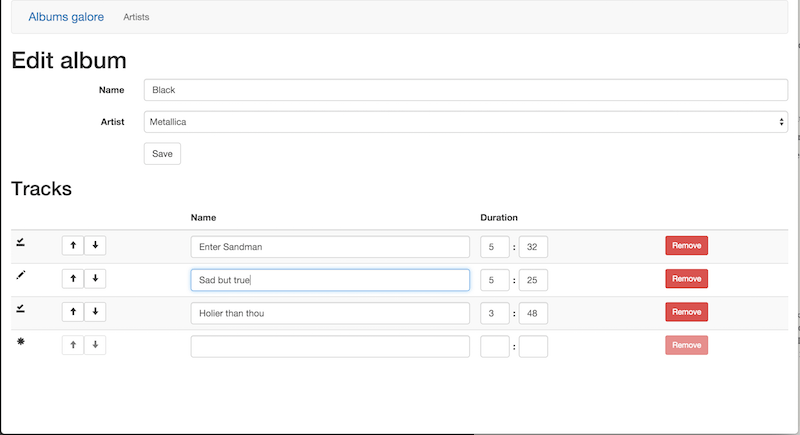

Example of using the Elm Bootstrap Tab module to create an interactive tab control.

Documentation/help

The most comprehensive (and only) application using Elm Bootstrap at the time of writing this is the user documention site http://elm-bootstrap.info. You can find the source for the site application on github too. In time this will improve a lot. With the introduction of Ellie we now also have a great way to share interactive/editable examples of how to use Elm Bootstrap.

If you need help, there is a #elm-bootstrap channel on the Elm slack where you can ask for help. I’ll try to help when I can and hopefully others can help out there going forward too.

Built on top of Twitter Bootstrap version 4

Twitter Bootstrap is one of the most popular CSS (with some JS) frameworks for building responsive, mobile first web sites. At the time of writing version 4 is in alpha-6 and apparantly the plan is to move into beta fairly soon. Version 4 is fully embracing flexbox, which will provide much better control and flexibility.

Creating a wrapper for Twitter Bootstrap probably doesn’t score very high on the hipster scale. However it’s no denying it’s still very popular and probably will be for some time to come. More importantly I’m using it in projects and have done so several times in the past, so I know it would be useful to me when I get a chance to work on an Elm project. Hopefully others will find Elm Bootstrap useful too.

Reasonably type safe you say ?

What’s reasonable is obviously a matter of opinion. But since it’s an Elm package we’re talking about, the context is that it’s for use in a statically typed language that promotes reliability as a core characteristic. There is also no denying that Elm doesn’t have the most advanced type system out there. But in my humble opinion it’s one of the most approachble ones I’ve come across in terms of statically typed functional languages.

There’s no stopping you from just including the CSS from Bootstrap and start using it with the standard Elm Html functions today. Let’s face it, Twitter Bootstrap is mostly just a whole bunch of classes you apply to relevant elements you compose and voila. But applying a bunch of class strings is quite error prone, and it’s easy to nest elements incorrectly or apply incorrect classes to incorrect elements. Trying to alleviate that to some extent is what I’ve been trying to balance with necessary flexibility when defining the API for Elm Bootstrap.

I’m under no illusions that I’ve found the sweetspot that perfectly balances type safety, flexibility and usability. But given the constraints (the type system in Elm and my relatively short experience with statically typed functional languages), I’m reasonably happy with the API as a starting point. Real life use and feedback will surely help it develop in a direction where more and more people can agree that it really is reasonably type safe !

The development story - aka refactoring galore

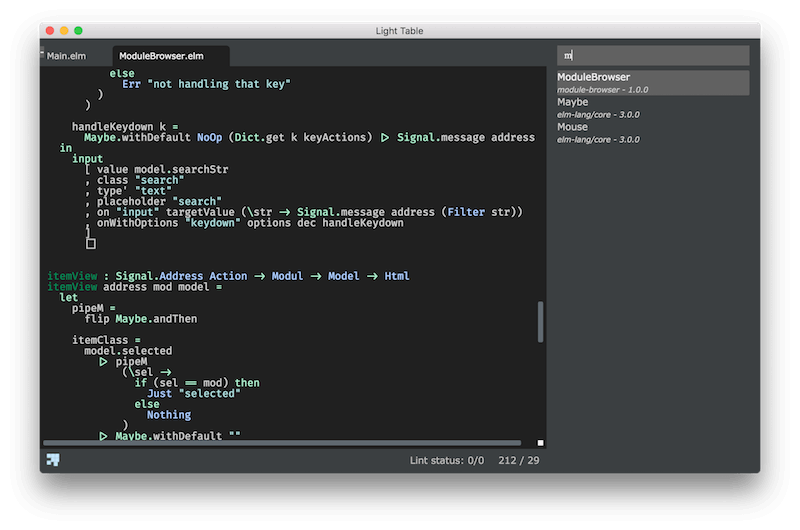

For quite some time my main endevaours in Elm has been developing editor support for Elm in Light Table through my elm-light plugin. I’ve also been working blogging a bit on my journey learning Elm (and a little Haskell). But in November last year I decided I wanted to dive deeper into Elm, trying to make something substantial. Ideally something useful, but first and foremost something that would gain me experience in designing an API for use by others in Elm.

The Bootstrap wrapper idea has crossed my mind several times in the past, but never materialized. I did some research, but couldn’t find anything out there for Elm that was quite as ambitious as I had in mind.

Where to start ?

I first started looking at the very impressive elm-mdl which brings awesome Google Material Design support to Elm. I got a ton of inspiration from this library. Next up I had a look through elm-sortable-table, trying to pick up on good advice and experience for tackling the interactive components in Twitter Bootstrap.

Hmm okay, let’s just start and see where it leads me.

Think, code, refactor ad infinitum

So I started with a couple of modules using a record based api for everthing. That gave me an API that was pretty type safe and certainly explicit. But it looked horribly verbose where in many cases it didn’t provide enough value and even in some cases put way to many restrictions on what you could do. DOH. Back to the drawing board.

I know ! Let’s have 3 list arguments for everything; Options (exposed union types), attributes and children. So I refactored almost everything (silly I know), but it didn’t really feel right with all those lists and I also started to get concerned that users would find it confusing with the std Elm Html functions taking 2 lists. Time to think and refactor again. After that I started to run into cases where I wanted to compose stuff from several modules, well because stuff is related.

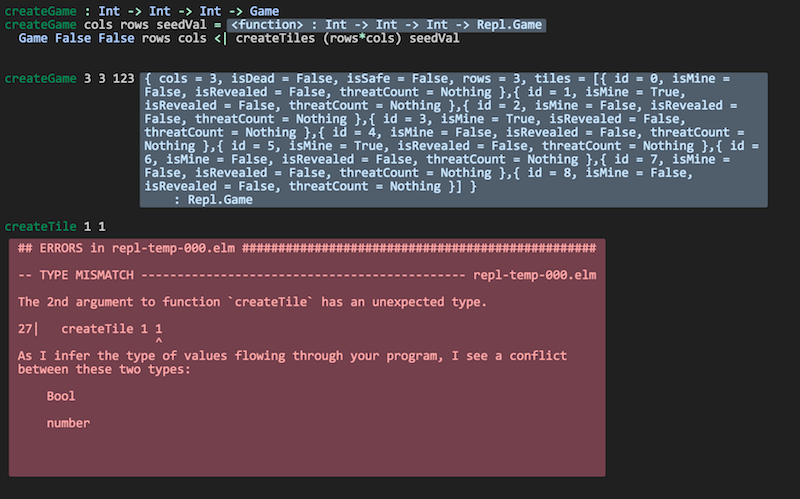

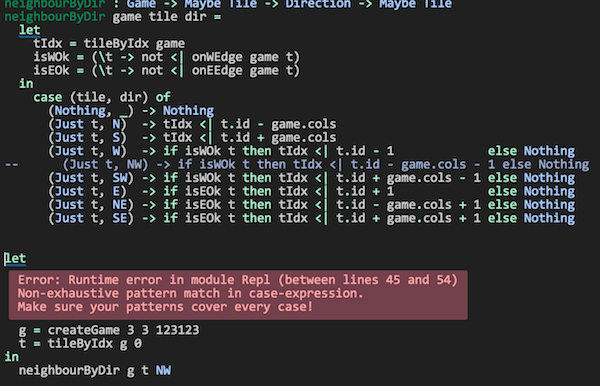

I’ll spare you all the details, but I can’t remember ever having refactored so much code so frequently that I have been during this process. Doing this in Elm has been an absolute pleasure. Truly fearless refactoring. The kind that is really hard to explain to other peope who haven’t experienced it. The Elm compiler and I have become the best of buddies during evenings and nights the past few months.

I can’t remember ever having refactored so much code so frequently that I have been during this process.

Two list arguments

For most elements functions take two list arguments. The first argument is a list of options, the second is a list of child elements. You create options by calling functions defined in the relevant module.

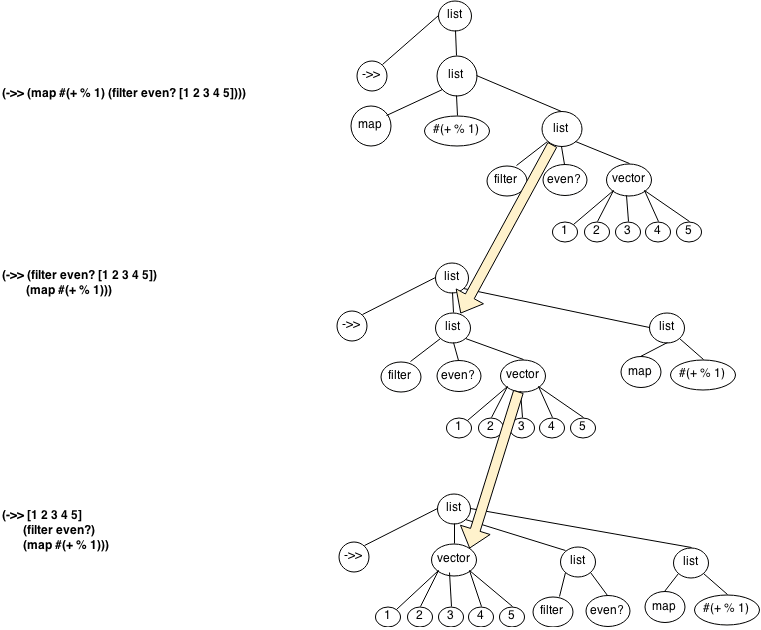

Pipeline friendly composition

Composition of more complex elements is done by calling pipeline friendly functions. This design gives a nice balance between type safety and flexibility.

Reaching out to the Elm Community

In the middle/end of January I reached a point where I on one hand was ready to just ship something. At the same time I was really unsure about what I had created so I reached out for comments on the elm-slack. Turns out that both Mike Onslow and Richard Feldman both have had overlapping ideas about creating a Bootstrap package for Elm. We quickly decided to see if we could cooperate in some fashion and decided to hook up on Google Hangout. Awesome ! We’ve had many really interesting discussions on slack especially related to API design. It’s been really great to have someone to talk to about these things (other than my analysis paralysis brain).

Going forward

I could have been iterating forever trying to nail the best possible API and/or try to support every bit of Twitter Bootstrap, but I’ve decided it’s better to just get it out there and get feedback.

The API will certainly get breaking changes going forward, but I don’t see that as such a big negative given the semantic versioning guarantees and version diffing support provided by the Elm package manager.

I’m hoping folks find this interesting and useful enough to give it a try and give feedback on their experiences. In the mean time I’m going to work on improving the documentation, test support, API consistency and support for missing Twitter Bootstrap features.

Typed up CRUD SPA with Haskell and Elm - Part 6: Elm 0.18 Upgrade

21 November 2016

Tags: haskell elm haskellelmspa

TweetAnother Elm release and it’s time for yet another upgrade post. The changes outlined in the migration guide didn’t look to intimidating, so I jumped into it with pretty high confidence. It took me about 2 hours to get through and it was almost an instant success. The compiler had my back all along, helped by my editor showing errors inline and docs/signatures whenever I was in doubt. I didn’t even have to resort to google once to figure out what to do. I said it almost worked the first time. Well I had managed to add a http header twice which Servant wasn’t to impressed by, but once that was fixed everything was working hunky dory !

Introduction

The Albums app is about 1400 lines of Elm code, so it’s small, but still it might give you some pointers to the effort involved when upgrading. With this upgrade I tried to be semi-structured in my commits so I’ll be referring to them as we go along.

Upgrade steps

Preconditions

-

Install Elm 0.18

-

Install elm-format

Running elm-upgrade

For this release @avh4 and @eeue56 created the very handy elm-upgrade util to ease the upgrade process.

To summarize what elm-upgrade does; It upgrades your project definition (elm-package.json) and it runs elm-format on your code in "upgrade mode" so that most of the syntax changes in core is fixed.

|

It worked great ! Only snag I had was that it failed to upgrade elm-community/json-extra, but hey that was simple enough for me to do afterwords. Here you can see the resulting diff. |

Service API - Http and Json changes

Changing a simple get request

| 0.18 | 0.17 | ||||||

|---|---|---|---|---|---|---|---|

|

|

| If you wish to keep the old behavior, you can convert a request to a task using toTask |

Changing a post request

| 0.18 | 0.17 | ||

|---|---|---|---|

|

|

Changing a put request

| 0.18 | 0.17 | ||||||

|---|---|---|---|---|---|---|---|

|

|

Changing Json Decoding

| 0.18 | 0.17 | ||||

|---|---|---|---|---|---|

|

|

| You can view the complete diff for the Service Api here. (Please note that the headers for the put request should not be there, fixed in another commit) |

Handling the Service API changes

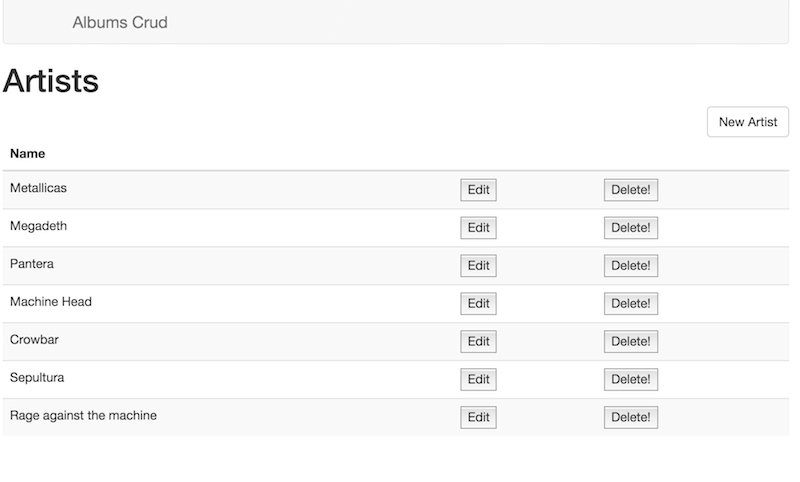

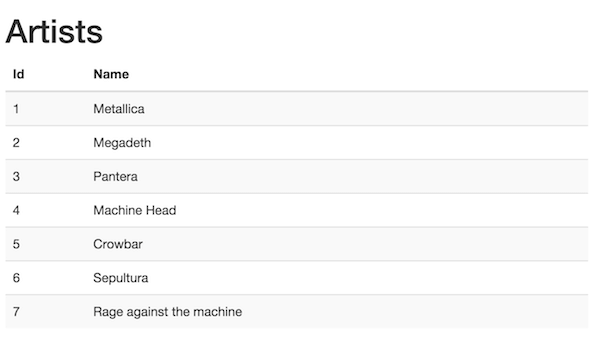

We’ll use the artist listing page as an example for handling the api changes. The big change is really that the messages have changed signature and we can remove a few.

Msg type changes

| 0.18 | 0.17 | ||

|---|---|---|---|

|

|

Changes to the update function

| 0.18 | 0.17 | ||||

|---|---|---|---|---|---|

|

|

|

The diffs for the various pages can be found here: |

Handling changes to url-parser

The url-parser package has had a few changes. Let’s have a closer look

| 0.18 | 0.17 | ||||||

|---|---|---|---|---|---|---|---|

|

|

Handling changes to Navigation in Main

Changing the main function

| 0.18 | 0.17 | ||||||

|---|---|---|---|---|---|---|---|

|

|

Changing the init function

| 0.18 | 0.17 |

|---|---|

We get the initial url passed as a Location to the init function. We just delegate to the update function to handle the url to load the appropriate page. |

|

Changing the main update function

| 0.18 | 0.17 | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

| You can see the complete diff here |

Summary

Obviosuly there were quite a few changes, but none of the were really that big and to my mind all of the changed things for the better. Using elm-upgrade and the upgrade feature in elm-format really helped kick-start the conversion, I have great hopes for this getting even better in the future.

I haven’t covered the re-introduction of the debugger in elm-reactor, which was the big new feature in Elm 0.18.

In addition to Elm 0.18 being a nice incremental improvement, it has been great to see that the community has really worked hard to upgrade packages and helping out making the upgrade as smooth as possible. Great stuff !

| A little mind-you that even though this simple app was easy to upgrade that might not be the case for you. But stories I’ve heard so far has a similar ring to them. I guess the biggest hurdle for upgrading is dependending on lot’s of third-party packages that might take some time before being upgraded to 0.18. Some patience might be needed. |

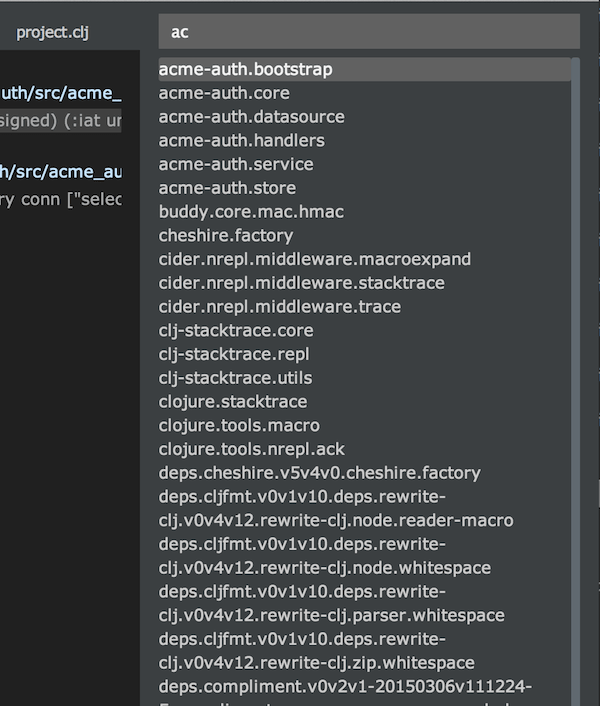

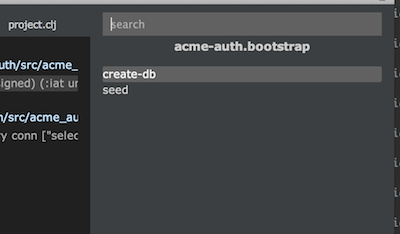

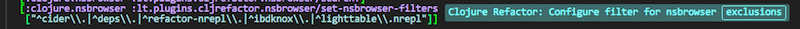

Elm Light 0.4.0 - AST driven Elm features in Light Table using PEG.js

15 September 2016

Tags: elm clojurescript javascript pegjs lighttable

TweetVersion 0.4.0 marks the first version of Elm Light that uses ASTs to enable more advanced IDE like features. This version includes features like; find usages, jump to definition, context aware auto-completer and some simple refactorings. It’s early days, but I’m in no doubt it will enable some pretty cool features going forward.

Evan Czaplicki the author of Elm has told the community on several occations not to block on something not being available from Elm. I’ll have to admit that I’ve been hoping for more tooling hooks from Elm for quite some time, an offical AST coupled with the Elm compiler would be super sweet. It’s definitely on the roadmap, but not a high priority for Elm (right now). My best bet would be to wait for the AST work put into elm-format to be made available. That might actually not be to far off. But several weeks ago I decided I wanted to give it a shot to do something simplified on my own. Mainly as a learning experience, but also to gather data for use cases that an AST can support and to learn a bit about parsing.

You’ll find a demo of the new features added in version 0.4.0 below. The rest of this post gives a brief description of my journey to create a parser and how I integrated that into the plugin.

| You can find the elm-light plugin here |

Demo of 0.4.0 Features

Creating a parser

Researching

It actually started a while back when I bought a book about parsers. It was almost 1000 pages. It turned out to be very uninspiring bed time reading. I guess I wasn’t motivated enough.

My only other experience with parsing since my University days was the stuff I did when porting rewrite-clj to ClojureScript. That ended up becoming rewrite-cljs, which I’ve used for some othere Light Table plugins I’ve created. But the syntax of Clojure is comparatively simple and also I did a port, so I can’t really claim any credits for the actual parsing anyways.

In the Clojure world I’ve used InstaParse which is a really neat library to build parsers. It also has a ClojureScript port, which I though would be good fit for Light Table. I found an old BNF for Elm called elm-spoofax, so I thought. Let’s give it a go. I spent a good week or so to get something that seemed to parse most Elm files I threw at it and provided a tree of nodes which looked fairly decent to work with. However I hadn’t read the README for the CLJs port that will and hadn’t really reflected on what an order of magnitude slower that it’s Clojure big brother actually meant. With a couple of hundred lines I started seeing parse-times nearing a second. I’m sure it could be optimized and tuned somewhat, but it was way off the mark of what I was going to need for continuos as you type parsing.

Back to the drawing board. I started looking at a ton of alternatives. Parser generators and parser combinators etc etc.

Enter PEG.js

After trying out a few parser generators I came across PEG.js. It looked approachable enough to me and they even had a nice online tool. So I set out on my way and decided to keep it simple. Just parse top level definitions. Spent a few days to get an initial version up and running. It was time to give it a performance test. YAY, for most files I got < 10ms parse times for some quite big ones (thousands of lines) I started seeing 100ms parse times. It still seemed worth pursuing. So I did !

| PEG.js is a simple parser generator. It supports a syntax that is BNF like, but you can smatter it with some JavaScript when appropriate. It also has nice error reporting and a few other nifty features. |

module (1)

= declaration:moduledeclaration EOS

LAYOUT

imports:imports?

LAYOUT

toplevel:topLevelDeclarations?

LAYOUT

{

return {

moduledeclaration: declaration,

imports: imports,

declarations: toplevel

}

}

moduledeclaration (2)

= type:(type:("effect" / "port") __ { return type; })? "module" __ name:upperIds __ exposing:exposing

{

return {

type: (type||"" + " module").trim(),

name: name,

exposing: exposing

};

}

// .. etc| 1 | The top level rule. It sort of looks like BNF, but you’ll also notice some JavaScript |

| 2 | The rule for parsing the module declaration, which again uses other rules, which again … |

I basically used a process of looking at this old Elm BNF as inspiration and then adjusting along the way. The PEG.js online tool was really helpful during this work.

|

Why a JavaScript parser generator ?

Well Light Table is based on Electron. So it’s basically a node server with a browser client build in. Having a parser that plays seemlessly with the basic building blocks of the browser is both convenient and practical in terms of distribution. I can just require the parser as a node module and off we go. The second reason is that for example my Haskell foo is not up to scratch. I would love to do it in Elm but current Elm combinator libraries just doesn’t provide enough building blocks for me to see this as a competive or realistic alternative quite yet. |

Designing for As You Type Parsing (AYTP ?)

The general idea I had was to design with the following in mind - Parsing everything (including 3.rd party packages) when connecting, is a bearable price to pay to ensure everything is hunky dory and good to go once you are connected - The design should support file changes not only from actions in the editor, but also from any outside process - Things generally have to be asynchronous to ensure the Editor stays responsive at all times - Only introduce (persistent) caching if there is no way around it

Listening for changes

To support parsing whenever a file changes or whenever you install or remove a package in your Elm projects I opted for using Chokidar. Elmjutsu - an excellent Elm plugin for Atom provided me with the inspiration here.

Each Elm project in Light Table will get it’s own node process running Chokidar. Whenever the appropriate events are fired, it will parse the file(s) needed and notify the Elm plugin editor process with the results.

var watcher = chokidar.watch(['elm-package.json', (1)

'elm-stuff/exact-dependencies.json',

'**/*.elm'], {

cwd: process.cwd(),

persistent: true,

ignoreInitial: false,

followSymlinks: false,

atomic: false

});

watcher.on("raw", function(event, file, details) { (2)

var relFile = path.relative(process.cwd(), file);

var sourceDirs = getSourceDirs(process.cwd());

if(relFile === "elm-stuff/exact-dependencies.json") {

if ( event === "modified") {

parseAllPackageSources(); (3)

}

if (event === "deleted") {

sendAstMsg({

type: "packagesDeleted"

});

}

}

if (isSourceFile(sourceDirs, file) && event === "modified") {

parseAndSend(file); (4)

}

if (isSourceFile(sourceDirs, file) && event === "deleted") {

sendAstMsg({

file: file,

type: "deleted"

});

}

if (isSourceFile(sourceDirs, file) && event === "moved") {

if (fileExists(file)) {

parseAndSend(file);

} else {

sendAstMsg({

file: file,

type: "deleted"

});

}

}

});

elmGlobals.watcher = watcher;

}| 1 | Start the watcher |

| 2 | To be able to handle renames and a few othere edge cases I ended listening for raw avents from Chokidar |

| 3 | Whenever this elm file changes is very likely that’s due to a package install, update or delete of some kind The time spent for parsing all package sources is proportionally small compared to the time spent on a package install so this "brute-force" approach actually works fine. |

| 4 | Parsing a single file on change and notifying the editor process with the results is the common case |

Caching the ASTs

In the Elm Light plugin Editor part, a Clojure(Script) atom is used to store all projects and their ASTs. Not only does it store AST’s for you project files, but it also stores ASTs for any 3.rd party packages your project depends on. That means that it does use quite a bit of memory, but profiling sugggest it’s not too bad actually. The great thing now is, that I have a Clojure datastructure I can work with. Slice and dice, transform and do all kinds of stuff with using the full power of the clojure.core API. Super powerful and so much fun too :-)

But what about this parsing as you type then ?

Well for every open Elm editor, there is a handler for parsing the editors content and update the AST atom. Again the actually parsing is performed in a node client process, otherwise the editor would obviously have ground to a halt.

(behavior ::elm-parse-editor-on-change (1)

:desc "Parse a connected elm editor on content change"

:triggers #{:change}

:debounce 200 (2)

:reaction (fn [ed]

(object/raise ed :elm.parse.editor))) (3)

(behavior ::elm-parse-editor (4)

:desc "Initiate parsing of the content/elm code of the given editor"

:triggers #{:elm.parse.editor :focus :project-connected }

:reaction (fn [ed]

(when (not (str-contains (-> @ed :info :path) "elm-stuff"))

(let [client (get-eval-client-if-connected ed :editor.elm.ast.parsetext)

path (-> @ed :info :path)]

(when (and client

(= (pool/last-active) ed))

(clients/send client (5)

:editor.elm.ast.parsetext

{:code (editor/->val ed)}

:only ed))))))

(behavior ::elm-parse-editor-result (6)

:desc "Handle parse results for a parsed editors content"

:triggers #{:editor.elm.ast.parsetext.result}

:reaction (fn [ed res]

(if-let [error (:error res)]

(do

(object/update! ed [:ast-status] assoc :status :error :error error)

(object/raise ed :elm.gutter.refresh))

(let [path (-> @ed :info :path)]

(object/update! ed [:ast-status] assoc :status :ok :error nil)

(elm-ast/upsert-ast! (-> (get-editor-client ed) deref :dir) (7)

{:file path

:ast (:ast res)})

(object/raise ed :elm.gutter.exposeds.mark)))

(elm-ast/update-status-for-editor ed)))| 1 | This the behaviour (think runtime configurable event handler) that triggers parsing whenever the editor contents change. |

| 2 | Parsing all the time is not really necessary for most things, so a debounce has been defined to not spam the node client |

| 3 | We delegate to the behaviour below which is a more generic trigger parsing behavior |

| 4 | This behavior is responsible for sending off a parse request to the node client |

| 5 | We send the parse request to the node client |

| 6 | Once the node client process has finished parsing this behviour will be triggered with the result |

| 7 | We update the AST atom with the AST for this particular combination of project and file represented by the editor |

| We only update the AST on succesful parses. A lot of the time when typing the editor contents will naturally not be in a correct state for parsing. We always keep track of the last valid state, so that allows the plugin to still provide features that doesn’t necessarily need an completely current AST. |

There is always an exception

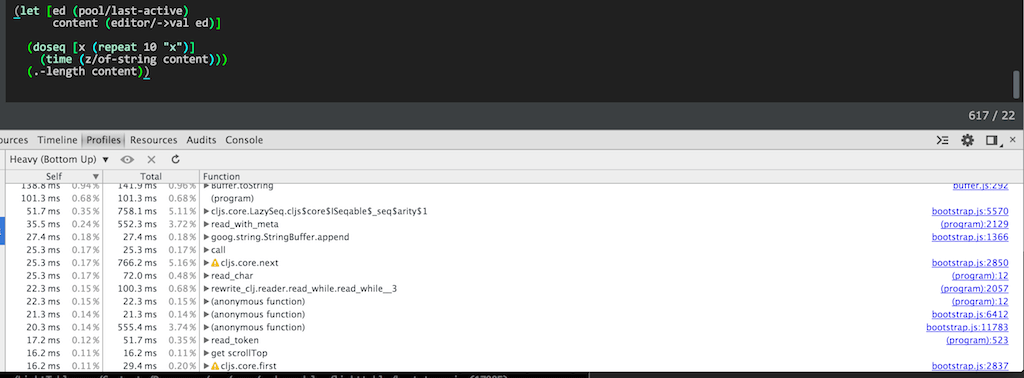

Things was working quite well initially, managed to get several features up and running. But when I started to rewrite the auto completer from using elm-oracle I hit a few killer problems; - The contiuous parsing started to tax the editor to the point that things became unusable - With debouncing I didn’t have accurate enough results to provide a proper context for context aware completions - I discovered general performance problems in how I’ve written my ClojureScript code - For large files synchrounous parsing was out of the question

Auto completers are tricky and doing it synchronous was proving useless for Elm files larger than a few hundred lines. Back to the drawing board.

Tuning

So providing hints for the autocompleter definitely has to happen asynchronously. But even that was to taxing for larger files and AST. So I spent quite some time optimizing the ClojureScript code. Turning to JavaScript native when that was called for. Heck I even threw in memoization a couple of places to get response times down. Even turning JSON into EDN (clojure data format) had to be tweaked to become performant enough. The whole process was quite challenging and fun. There are still things to be tuned, but I’ll wait and see what real usage experience provides in terms of cases worth optimizing for.

Partial synchronous partial parsing

The autocompleter is async, but for some cases it turned out to be feasible to do a partial parse of the editors contents. PEG.js has a feature to support multiple start rules, so I ended up defining a start rule that only parses the module declaration and any imports. That allowed the context sensitive hints for module declartions and imports to have a completely up to date AST (well as long as it’s valid) and at the same time keep the autocompleter responsive enough.

Really large files

Depending on who you ask, you might get a different definition, but to me Elm files that are several thousand lines are large. So hopefully they are more the exception than the rule. But for files of that size the autocompleter will be a little slugish. Not too bad (on my machine!), but you will notice it.

| If you experience this, do let me know. And also be aware that turning off the auto-completer is deffo and option and easy for you to do. The guide contains instructions for how to do that. |

Refactoring

It would be really neat if I could refactor in the AST itself and just "print" the update result back to the editor. However with the complexities of the AST already, the fact that I’m not even parsing everything yet and all interesing challenges with an indentation sensitive language with lot’s of flexibility in terms of comments and whitespace… Well that’ll have to be a future enterprise.

That’s not entirly true though. For a couple of the features I sort of do that, but only for a select few nodes of the AST, and the change is not persited to the AST atom (think global database of ASTs). So it’s like a one-way dataflow:

-

get necessary nodes from AST atom

-

update the node(s)

-

print to editor

-

editor change triggers AST parsing for editor

-

node client notifies editor behaviour responsible for updating the AST atom

-

AST Atom gets updated

-

The AST atom is up to date, but slightly after the editor

(behavior ::elm-expose-top-level

:desc "Behavior to expose top level Elm declaration"

:triggers #{:elm.expose.top.level}

:reaction (fn [ed]

(let [path (-> @ed :info :path)

prj-path (project-path path)

module (elm-ast/get-module-ast prj-path path) (1)

exposing (-> module :ast :moduleDeclaration :exposing)] (2)

(when-let [decl (elm-ast/find-top-level-declaration-by-pos (3)

(editor/->cursor ed)

module)]

(when-not (elm-ast/exposed-by-module? module (:value decl))

(let [{:keys [start end]} (elm-ast/->range (:location exposing))

upd-exp (elm-ast/expose-decl decl exposing) (4)

pos (editor/->cursor ed)

bm (editor/bookmark ed pos)]

(editor/replace ed (5)

start

end

(elm-ast/print-exposing upd-exp))

(safe-move-cursor ed bm pos)))))))| 1 | Get the AST root node for the module the current editor represents |

| 2 | From that retrieve the exposing node (this is the one we want to update) |

| 3 | Find the declaration to expose based on where the cursor is placed in the editor |

| 4 | Update the exposing AST node to also expose the given declaration in <3> |

| 5 | Overwrite the exposing node in the editor, that works because we have the current location of it already :-) |

Once the editor is changed, the normal process for updating the global AST atom is triggered.

Summary and going forward

Writing a parser (with the help of a parser generator) has been a really valuable learning experience. After my failed attempt with InstaParse, it’s hard to describe the feeling I had when I saw the numbers from my PEG.js based implementation. I tried to talk to my wife about it, but she couldn’t really see what the fuzz was all about !

I’ll continue to make the parser better, but the plan isn’t to spend massive amounts of time on making that perfect. I’d rather turn my attention on trying to help the Elm community and it’s tooling people access to an AST on stereoids. My bet is that the AST from elm-format is going to be the way forward, so I’ll try to help out here. Hopefully my own experience will be useful in this process.

I’m pretty sure I can carry on to make some pretty cool features with the AST i already have, so there will defininetely be some cool stuff coming in Elm Light in the near future regardless of what happens in the AST space and tooling hooks for Elm in general.

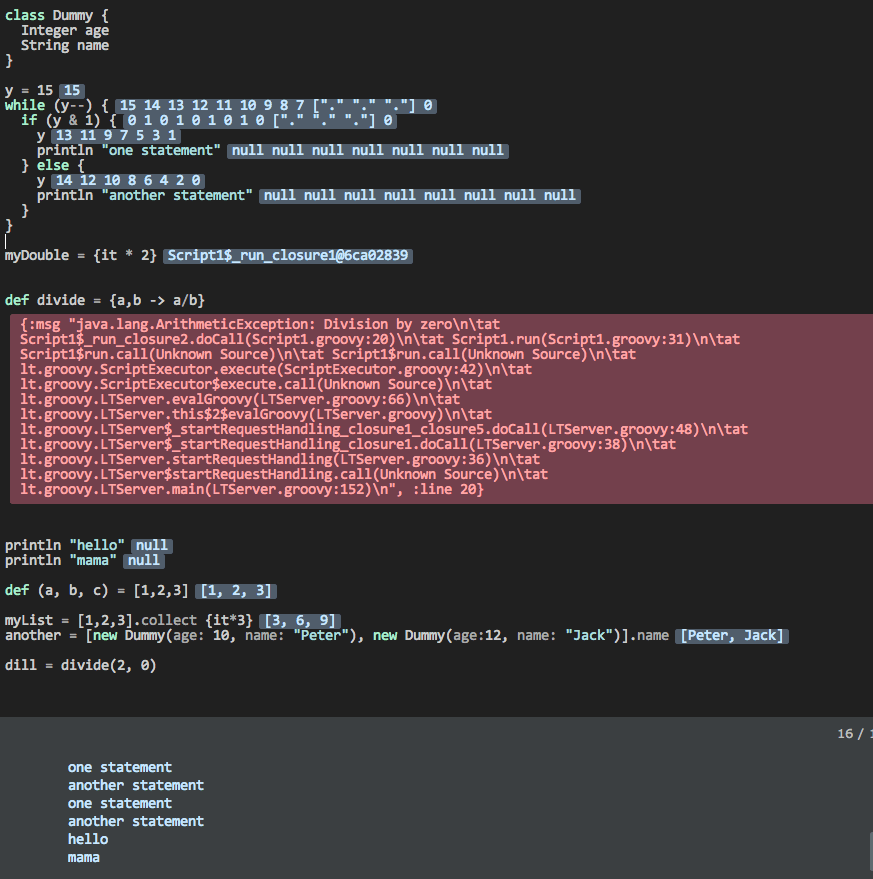

Oh no! Learning Elm has gotten me confused about static vs dynamic typing.

13 June 2016

Tags: elm haskell groovy clojure

TweetThe last few years I’ve worked on projects using Groovy and Clojure/ClojureScript. In 2016 I’ve spent quite a bit of pastime trying to get to grips with statically typed functional languages. In particular I’ve deep dived into Elm. I’ve also dipped my toes into Haskell. It’s been a great learning experience and has changed my view of static typing. Does this mean I’m a static typing zealot now ? Nah, but there is no doubt I’m much more open to the benefits of static typing. I guess would characterize myself more like undecided or confused than convinced either way.

Background

A couple of years ago I started working with Groovy (and Grails). I fell in love and felt I became way more productive than I had ever been when working with Java. I rarely missed the added type safety of Java. Groovy also gave me a gentle introduction to functional programming related concepts in a way Java never encouraged me to.

In 2014 I started dabbling with Clojure(Script), but it took until 2015 before I got a chance to use it for real in a project. It was a blast and I finally started to understand why more and more people are turning towards functional programming. Clojure/ClojureScript became a big part of my life both at work and evenings and nights. I was hooked.

At the end of last year I was back on a Groovy/Grails project. I was perfectly ok with that, but it wasn’t a shiny new thing any longer, so I guess that’s partially why I was looking for something new to learn on the side. Elm really caught my attention. When I watched Let’s be mainstream! User focused design in Elm I finally found a statically typed functional language that looked approachable to me.

First signs of functional immutable damage

My time with Clojure (and gradually Elm) had changed me. I started to change how I coded Groovy. I tried to be more functional and whenever I declared a variable that I later mutated I was left feeling dirty somehow. It’s hard to try to enforce immutability in Groovy when the language, libraries and idioms don’t make immutability a first class citizen. I had to bite the bullet quite a few times, and yeah I could still get things done. The thing is, I started to pay more attention to what kind of errors and bugs I introduced as a result of careless mutation.

One particular example springs to mind. I was doing validation of a master/detail form. To validate the detail rows of the form I needed to make sure they were sorted.

master.detailRows.sort {it.date}However this had the nasty side-effect

of reordering the detail rows in my UI which was rendered from this object.

I was puzzled at first, but then I remembered that immutability is not something you

can take for granted in the Groovy collection API (some things are, others are just bolted on top of Java).

The fix was easy enough. collection.sort has an overloaded version that takes a boolean parameter mutate.

master.details.sort(false) {it.date}My eyes hurt: sort false, but but I do want to sort.

2 years ago I wouldn’t think much of this, it’s just a thing I was used to deal with and spend cycles on.

Now I get annoyed both with myself for forgetting and the language for making me feel dumb for not remembering.

Adding types

After having spent some time with Elm (and Haskell) I noticed I started to add more type information in my Groovy code. I felt it improved the readability of my functions and methods. It also made IntelliJ more happy and helpful in many cases. The frontend of the application is written in JavaScript with a fair chunk of jQuery. Introducing something like Flow might be helpful, but I’m convinced there are other more fundemental issues that needs to be addressed before considering that.

I’m pretty sure I’ll be using something like Schema more actively when writing Clojure/ClojureScript going forward. When I have the chance Clojure Spec will probably be the preferred option. I know it’s not static typing, but my hunch is that it will have a huge positive impact on documentation, error messages, runtime checks, testing and probably many other things too.

Functionally dynamic, but statically aware

This week I was back to a Clojure/ClojureScript project again. I’m quite excited and I’m convinced I’m going to have a blast. However I’ve decided to use this opportunity to reflect more on where I feel Clojure/ClojureScript with it’s dynamic typing shines and where I think static typing might have been helpful. After spending so much time with Elm and very much enjoying it, I might be susceptible to confirmation bias that static typing only carries benefits. I’m going to try real hard to stay as objective or rather true to myself as I can when reflecting on positives of static vs dynamic. Of course there’s a lot more to languages than static vs dynamic typing. I do find it interesting to reflect about it though, especially since so many people seem to have such strong opinions about type safety. I myself am mostly confused or largely undecided at the moment.

First week reflections

| Undoubtably with some Elm tinted glasses |

-

Figwheel how I’ve missed you ! With the latest release, error messages has gotten way better too. Maybe Elm with it’s suberb error messages has been an inspiration ?

-

I haven’t gotten my tooling set up right yet, but know that once I’ve got the REPL stuff set up right I’m going to be a happier puppy

-

The app is really cool, and there are lots of exciting techs to dig into

-

Paredit rocks for editing

-

re-frame - When I first read about it several months ago I was initially convinced that this small but very powerful framework was a fantastic thing. Maybe it really is to. But having experienced Elms departure from FRP, I’m wondering whether it might have some drawbacks at scale that I am not aware of yet. I’ve barely gotten a chance to work with it, but I’ve so far found it hard to internalize all the reactive dataflows going on in the client app. I obviously need to spend more time before making any judgment. Maybe I’ll write a blog post comparing The Elm Architecture to re-frame in the future.

-

I genuinly found it harder than before to understand what various functions did by looking at their signature. That could very well be down to naming and lack of documentation and or something like schema, but it was initially frustrating to see functions with map parameters and having to read through the whole of the implementation to get an understanding of what they might contain.

printlnto the rescue… sort of. -

I made silly mistakes, some of these resulted in things just not happening and others resulted in stacktraces that wasn’t helpful in anyway at all. I can’t help but think about the fact that static types and a helpful compiler would have prevented me from making many of those mistakes. Sure I should have tested more, both through the use of the REPL and probably more proper tests too.

-

I was faced with a few refactorings, that didn’t really go as well as I feel it should have. Again more tests would have helped, but then again a lot of those tests I just wouldn’t have needed to write in Elm.

An attempt to summarize

I’m convinced that functional programming vs imperative programming is a much more important concern than static vs dynamic typing. I’m also in no doubt that I think functional programming is by far superior. A year ago I was solidly in the dynamic typing camp. My impression of statically typed functional languages was that they were way to hard to get started with. None of the languages I had heard about seemed particularily approachable and I had doubts about their practicality for the problems I typically encounter in my projects. I’ve tried Haskell a couple of times, but I guess I was never commited enough. Learning Elm has not only been great fun, but It has clearly opened my mind to the possibility that static type checking can be very beneficial. It’s hard to describe the experience of doing a major refactoring, having a really helpful and friendly compiler guide you along step by step and when finally everything compiles it just works. Having had that experience many times with Elm (and to a degree Haskell) certainly changed something fundementally in my thinking.

Until I have used Elm, Haskell or another statically typed functional language in a real project I’m in no position to pass any proper (personal) judgement. Maybe I’m just going to remain confused, or maybe I’m never going to have a particularily strong preference.

Typed up CRUD SPA with Haskell and Elm - Part 5: Elm 0.17 Upgrade

30 May 2016

Tags: haskell elm haskellelmspa

TweetElm version 0.17 was released a few weeks back. If haven’t already, you should read the annoucement post A Farewell to FRP. So what does that mean for the Albums app ? Sounds like we’re in for a massive rewrite. It turns out, since we were already using The Elm Architecture to structure our application, the impact isn’t that big after all. Most of it is mechanical, and actually the biggest change is that we can no longer use the 3.rd party routing library we depended on.

Introduction

I would have done the upgrade to 0.17 sooner, but the Album application depended on a 3rd party package called elm-transit-router. It served us well and we even got some nice transition animations when changing pages. However as all the routing libraries that we’re available for 0.16, it depended on a community package called elm-history. That package was never going to updated to support 0.17, in fact all support for the Web Platform APIs will eventually supported by the Elm language.

Last week Navigation was announced. This is library for managing navigation in a SPA. It provides nice abstractions over the History API. In tandem Evan released URL Parser which is a simple parser for turning URLs into structured data.

With that in place I felt confident we should be able to do the upgrade. Let’s walk through the highlights !

Upgrade steps

Upgrading packages

| 0.17 | 0.16 | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

Mechanical changes

Module declarations

In 0.16 we had

module Main (..) whereIn 0.17 we have

module Main exposing (..)Luckily Elm Format handles this conversion automatically for us when we do format of an 0.16 .elm file !

So we can just run elm-format on the src directory.

Effects are now named Cmd

The new name for Effects are now Cmd shorthand for Command. Cmd is part of elm-lang/core

and lives in the Platform.Cmd module.

| 0.17 | 0.16 | ||||

|---|---|---|---|---|---|

|

|

Making these changes is also fairly trivial with a good old search/replace.

Mailbox and address are gone in 0.17

| 0.17 | 0.16 |

|---|---|

|

|

-

The address parameter is gone, you no longer need to concern yourself with the intricacies of mailboxes. But you’ll also notice that the return value type

Htmltakes a tag which in this case is ourMsgtype. So if we have any event handlers in our view code, we are telling it that those should result in a message of typeMsg. We’ll come back to this in a bit more detail when we go through a nesting example. -

We no longer need to deal with an address for our event handler, we just tell Elm that when the user clicks the button, it should trigger our update function with the given

MsgSomeMsg. The Elm runtime will take care of routing the message to our update function without any address mumbojumbo !

Again making this change is largely a matter of search/replace. There are a few exceptions though.

| 0.17 | 0.16 |

|---|---|

|

|

But let’s say you actually do need a custom decoder it would still be simpler than in 0.16

import Json.Decode as Json

-- ...

[ input

[ class "form-control"

, value model.name

, on "input" (Json.map SetArtistName targetValue) (1)

]

[]

]| 1 | Here we just map over the targetValue, and call SetArtistName with the value. targetValue is a Json decoder which picks out the value from our input field when the event is triggered |

Routes and Route parsing (ehh… URLs if you like)

| 0.17 | 0.16 | ||||

|---|---|---|---|---|---|

|

|

| 0.17 (url-parser) | 0.16 (elm-route-parser) |

|---|---|

|

|

The parsing syntax is slightly different, but the transition was fairly trivial in our case. The observant reader will notice that we’ve skipped over the case when there is not matching route. We’ll get back to that when we wire it all together. Also, we’ll see later where our decode function comes into play when we wire up the app.

| To learn more about the new url-parser and it’s functions check out the package docs. |

encode : Route -> String

encode route =

case route of

Home ->

"/"

ArtistListingPage ->

"/artists"

NewArtistPage ->

"/artists/new"

ArtistDetailPage i ->

"/artists/" ++ toString i

AlbumDetailPage i ->

"/albums/" ++ toString i

NewArtistAlbumPage i ->

"/artists/" ++ (toString i) ++ "/albums/new"Encoding routes is pretty much exactly the same as before.

| 0.17 (url-parser) | 0.16 (elm-route-parser) | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

I borrowed most of this code from The tacks application from @etaque. Kudos to @etaque for coming up with this !

You might be wondering why we need catchNavigationClicks at all ? Well if you click on a href, the browser will (to my knowledge) change the window location

and trigger a server request which causes the page to reload. In an SPA we typically don’t want that to happen.

|

Please be advised that these helpers do make a compromise in terms of type safety. Note in particular the use of msg (basically anything) rather than a component specific Msg type.

I’m sure in due time, more type safe patterns will emerge. An obvious alternative to this approach is to

have a custom message in each update function that handles navigation. I’m going to try that out in the near future and see how it plays out.

|

Sample usage

Let’s have a quick look at a few examples on how we are using the navigate and linkTo helper functions

in the Albums app. How it all fits together will hopefully be apparent when we describe how we wire everything together in our Main module a little later on

| 0.17 | 0.16 | ||||

|---|---|---|---|---|---|

|

|

| 0.17 | 0.16 | ||

|---|---|---|---|

|

|

Dealing with Http

So in our Album app we separated all HTTP requests to a separate module we called ServerApi.

The changes from 0.16 to 0.17 isn’t massive, but since we’re at it we might as well make some small improvements

to be better prepared for error handling in future episodes.

| 0.17 | 0.16 |

|---|---|

|

|

The http methods haven’t really changed, but the manner in which we request the runtime to perform them have changed.

We no longer have the Effects package, so we need to use Task.perform to do it now. Our 0.16 implementation used

Maybe to signal success or failure, in 0.17 we have opted to give a different message for success or failure.

So if getArtist fails the error result of or http action will be passed to our update function wrapped in the provided Msg given by our errorMsg param,

if it succeeds the response will be json decoded and passed to our update function wrapped in the provided Msg given by our msg param.

| Separating out all our http requests in one module gives flexibility in usage from multiple modules, but comes with a price of reduced type safety though. You might (depending on context of course) want to localize http stuff with your components to make them more self-contained. |

Usage Comparison

update : Action -> Model -> ( Model, Effects Action )

update action model =

case action of

NoOp ->

( model, Effects.none )

GetAlbum id ->

( model

, Effects.batch

[ getAlbum id ShowAlbum

, getArtists HandleArtistsRetrieved

]

)

ShowAlbum maybeAlbum ->

case maybeAlbum of

Just album ->

( createAlbumModel model album, Effects.none )

Nothing -> -- TODO: This could be an error if returned from api !

( maybeAddPristine model, getArtists HandleArtistsRetrieved )

HandleArtistsRetrieved xs ->

( { model | artists = (Maybe.withDefault [] xs) }

, Effects.none

)Our use of Maybe to signal failure in our 0.16 implementation clearly muddles what’s going on in terms of potential failures.

mountAlbumCmd : Int -> Cmd Msg (1)

mountAlbumCmd id =

Cmd.batch

[ getAlbum id FetchAlbumFailed ShowAlbum

, getArtists FetchArtistsFailed HandleArtistsRetrieved

]

mountNewAlbumCmd : Cmd Msg (2)

mountNewAlbumCmd =

getArtists FetchArtistsFailed HandleArtistsRetrieved

update : Msg -> Model -> ( Model, Cmd Msg )

update msg model =

case msg of

-- TODO: show error

FetchAlbumFailed err -> (3)

( model, Cmd.none )

ShowAlbum album -> (4)

( createAlbumModel model album, Cmd.none )

HandleArtistsRetrieved artists' ->

( { model | artists = artists' }

, Cmd.none

)

-- TODO: show error

FetchArtistsFailed err ->

( model, Cmd.none )

-- rest left out for brevity| 1 | This command has been separated out as an exposed function for the module. The reason is that we need to perform this when we navigate to a `/albums/<id>. I.e when that particular url is mounted. You’ll see how when we cover the Main module. We are actually running two http requests here.. hopefully/presumably in the order they are listed :-) |

| 2 | Similar to the above, but this is for handling when the user navigates to the url for creating a new album |

| 3 | if getAlbum should fail this is where we should handle that (And we will eventually in a future episode) |

| 4 | If getAlbum succeeds we set the model up for displaying the retrieved artist |

Nesting Components

The way you handle nesting of components in 0.17 has changed (for the better) with the removal of Mailboxes. If you didn’t do to much fancy stuff with addresses the transition to 0.17 should be quite straight forward. We’ll illustrate by showing a simple/common transition and then we will show how you might handle a more complex nesting scenario (based on actual examples from the Albums App)

The common scenario

| 0.17 | 0.16 | ||

|---|---|---|---|

|

|

I think you’ll agree this change is pretty simple to deal with. Let’s see how nesting of view functions for components have changed

| 0.17 | 0.16 | ||||||

|---|---|---|---|---|---|---|---|

|

|

This change isn’t quite search/replace (well with regex perhaps), but it’s quite trivial too. Ok let’s move onto something a bit more complex.

A more complex scenario - Album and tracks

If you wish to see the Album and Tracks solution in action, you can check it out here:

| 0.17 | 0.16 | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

There is no magic involved here, we are just returning an additional piece of info in the return value of our update function.

| 0.17 | 0.16 | ||||||

|---|---|---|---|---|---|---|---|

|

|

update : Action -> Model -> ( Model, Effects Action )

update action model =

case action of

-- ...

RemoveTrack id ->

( { model \| tracks = List.filter (\( rowId, _ ) -> rowId /= id) model.tracks }

, Effects.none

)

MoveTrackUp id ->

-- ...

MoveTrackDown id ->

-- ...

ModifyTrack id trackRowAction ->

let

updateTrack ( trackId, trackModel ) =

if trackId == id then

( trackId, TrackRow.update trackRowAction trackModel )

else

( trackId, trackModel )

in

( maybeAddPristine { model | tracks = List.map updateTrack model.tracks }

, Effects.none

)update : Msg -> Model -> ( Model, Cmd Msg )

update msg model =

case msg of

-- ...

RemoveTrack id -> (1)

( { model \| tracks = List.filter (\( rowId, _ ) -> rowId /= id) model.tracks

}

, Cmd.none

)

MoveTrackUp id ->

-- ...

MoveTrackDown id ->

-- ...

ModifyTrack id trackRowMsg ->

case (modifyTrack id trackRowMsg model) of (2)

Just ( updModel, Nothing ) -> (3)

( model, Cmd.none )

Just ( updModel, Just dispatchMsg ) -> (4)

handleDispatch id dispatchMsg updModel

_ ->

( model, Cmd.none ) (5)

modifyTrack : TrackRowId -> TrackRow.Msg -> Model -> Maybe ( Model, Maybe TrackRow.DispatchMsg )

modifyTrack id msg model = (6)

ListX.find (\( trackId, _ ) -> id == trackId) model.tracks

\|> Maybe.map (\( _, trackModel ) -> TrackRow.update msg trackModel)

\|> Maybe.map

(\( updTrack, dispatchMsg ) ->

( maybeAddPristine

{ model

\| tracks =

ListX.replaceIf (\( i, _ ) -> i == id)

( id, updTrack )

model.tracks

}

, dispatchMsg

)

)

handleDispatch : TrackRowId -> TrackRow.DispatchMsg -> Model -> ( Model, Cmd Msg )

handleDispatch id msg model = (7)

case msg of

TrackRow.MoveDown ->

update (MoveTrackDown id) model

TrackRow.MoveUp ->

update (MoveTrackUp id) model

TrackRow.Remove ->

update (RemoveTrack id) model| 1 | The parent, ie AlbumDetail, logic for deleting on of it’s track rows. |

| 2 | We delegate updating the track row and consequently the AlbumDetail model to a helper function. We pattern match on the result from that function. |

| 3 | If it was a "normal" update with no dispatch message returned we simply return the updated model and a no op Cmd. |

| 4 | If the update of the track row got a dispatch message in return from TrackRow.update we delegate the handling of the dispatch message to another helper function. |

| 5 | Since we are dealing with Maybe values we have to handle this case, but it really shouldn’t ever happen ! (Famous last words). |

| 6 | This might look a bit scary, but in summary it; locates the correct track row, performs the update of that row by delegating to TrackRow update, updates the track row in the model with the updated track row and finally returns a tuple of the updated model and the dispatch message (which is Maybe you remember). |

| 7 | Here we simply pattern match on the dispatch message and invokes the update function with the appropriate corresponding Msg. |

The pattern we used here is just one of many possible ways of solving this problem. Maybe someday a common preferred pattern will emerge,

but the bottom line is that it will most likely be some variation of return values from update functions and/or input params to the view function in the parent/child communucation.

The days of "magic" juggling with mailboxes are gone. Simple input/output FTW !

Oh, and finally, for this particular case I think there might be a good case for arguing that perhaps remove/moveup/movedown doesn’t really belong in TrackRow at all, it might

actually make more sence to use a decorator-kind of approach instead.

|

| Some flavors of using "global" or dummy effects (using say dummy tasks) for communicating between components have briefly surfaced. Pls think really carefully before adopting such an approach. Have a chat with the nice and very knowledgable people in the community to discuss if there isn’t a better solution for your problem ! |

Wiring it all together in frontend/src/Main.elm

| 0.17 | 0.16 | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

| 0.17 | 0.16 | ||

|---|---|---|---|

|

|

I don’t think it’s much point in describing the other slight differences, since they mostly pertain to details about elm-transit-router.

Url updates / Mounting routes

mountRoute : Route -> Route -> Model -> ( Model, Effects Action )

mountRoute prevRoute route model =

case route of

Home ->

( model, Effects.none )

ArtistListingPage ->

( model, Effects.map ArtistListingAction (ServerApi.getArtists ArtistListing.HandleArtistsRetrieved) )

ArtistDetailPage artistId ->

( model

, Effects.map ArtistDetailAction (ServerApi.getArtist artistId ArtistDetail.ShowArtist) )

NewArtistPage ->

( { model | artistDetailModel = ArtistDetail.init }, Effects.none )

-- etc ..

EmptyRoute -> (1)

( model, Effects.none )| 1 | This is how we handled route parse failures in our 0.16 implementation btw. |

urlUpdate : Result String Route -> Model -> ( Model, Cmd Msg )

urlUpdate result model =

case result of

Err _ -> (1)

model ! [ Navigation.modifyUrl (Routes.encode model.route) ]

Ok (ArtistListingPage as route) -> (2)

{ model | route = route }

! [ Cmd.map ArtistListingMsg ArtistListing.mountCmd ]

-- rest left out for brevity

Ok ((NewArtistAlbumPage artistId) as route) -> (3)

{ model

| route = route

, albumDetailModel = AlbumDetail.initForArtist artistId

}

! [ Cmd.map AlbumDetailMsg AlbumDetail.mountNewAlbumCmd ]

Ok route -> (4)

{ model | route = route } ! []| 1 | If url parsing for a new url fails we just change the url back to url for the current route(/page) It might be appropriate to show an error of some sort error. |

| 2 | When the we change url to the artist listing page we wish to initiate the http request for retrieving artists from our backend. That’s where ArtistListing.mountCmd comes into the picture. |

| 3 | In addition to providing an effect, we need to ensure that the albumDetailModel starts with a clean slate when the page for adding a new album is displayed. It might have been a good idea to separate this out to it’s own component to avoid quite a bit of coniditional logic. |

| 4 | For any other url changes we just update the route field in our model. |

|

What’s up with the

|

Just a little more on the main update function, related to navigation

| 0.17 | 0.16 |

|---|---|

The navigate message triggers a call to the Navigation.newUrl function. That will step to a new url and update the browser history. You’ll see in the next chapter were we trigger this message. |

This is a elm-transit-router specific handler that takes care of starting and stopping animation transitions + updating the route field of our model. |

View

| 0.17 | 0.16 | ||||||

|---|---|---|---|---|---|---|---|

|

|

Summary

Most of the changes went really smoothly and quickly. I did have to spend a little bit of time to get familiar with the new navigation and url-parser package, but they are pretty intuitive. I wouldn’t be lying if I said I spent much more time on writing this blog post than doing the upgrade. I also did quite a few changes to the implementation of details I haven’t shown you, just because I’ve become more confident with Elm than I was when writing the previous episodes.

It was quite a bit of changes in terms of LOC’s and I have to be honest and tell you it’t didn’t work once everything compiled. But you can hardly blame Elm for that, it was all my bad. I hadn’t tested the route parsing properly and ended up implementing a loop. Kind of like a redirect loop, but all in js and out of reach for the browser. Firing up the inline repl in Light Table and interactively testing the parser quickly showed me the errors of my ways.

All in all I have to say the upgrade was a really fun and enjoyable ride. I can definately say that 0.17 made the App turn out much nicer.

Hard to say for sure, but my current thinking is to start looking at auth using JWT web tokens. Time will tell if that’s what it’ll be.

Appendix

Unfortunately the 0.17 release left elm-reactor a bit behind in terms of what it supports. From my past experience with ClojureScript, I have gotten used to the feeback loop you get by using the wonderful figwheel. elm-reactor unfortunately doesn’t come close to that currently, so I had to turn to JS land for alternatives. After some evalutation and trials I ended up using elm-hot-loader. It has worked out really nicely even though I ended up pulling down a fair chunk of the npm package repo.

I’m sure elm-reactor will be back with a vengeance in the not so distant future, packing some really cool and unique features.

Elm Maybe - Dealing with null/Nothing

07 April 2016

Tags: elm javascript

TweetIf you have worked with JavaScript (or quite a few other languages that embrace null) I bet you have had one or two errors that can be traced back to an unexpected null reference. Some of them are obvious, but others are really tricky to track down. I’m sure most of you are well aware that quite a few other languages banishes null and introduces a Maybe or Option type to handle nothingness. Elm is one of those languages. Before I started looking at Elm I hadn’t really worked with Maybe types. In this blogpost I thought I’d share a little more insight on how to work with them in Elm. I’ll also briefly cover how they might be (or not) used in JavaScript for reference.

Elm Maybe

| Elm is a statically typed language which compiles down to JavaScript. Types is a core ingredient of Elm, that’s not the case with JavaScript obviously. |

type Maybe a = Just a | NothingThe Maybe type in Elm looks deceivingly simple. And actually it is.

The type is parameterized and the a is a placeholder for a concrete type in your program.

So a here means any type (Int, String, Float etc). A Maybe can have one of two values; either Just some value of type a or it is Nothing.

Where does Just and Nothing come from ? Are they defined somewhere else ? They are part of the type definition, think of them as tags. The name of these "tags"

must start with an upper case letter in Elm.

x = Just 0.0 -- Just 0.0 : Maybe.Maybe Float (1)

y = Nothing -- Nothing : Maybe.Maybe a (2)| 1 | The variable x Maybe with the tag Just and the Float value 0.0 (Maybe lives in a namespace or rather module in Elm called Maybe, that’s why the actual type definitions states Maybe.Maybe) |

| 2 | The variable y becomes a Maybe with the tag Nothing. Nothing has no value, and hence no value type associated. Nothing is Nothing, but it’s still a Maybe though :-) |

Quick detour - Type annotations

Elm is a statically typed language, everything is represented through types. So before we carry on I’d like to briefly cover the concept of type annotations.

Since JavaScript doesn’t have types, I’ll use Java as a comparable example

public int increment(int value) {

return value++;

}

public int add (int x, int y) {

return x + y;

}increment : Int -> Int (1)

increment value =

value + 1

add : Int -> Int -> Int (2)

add x y =

x + y| 1 | The type annotation for increment tells us it is a function which takes an argument of type Int and returns an Int |

| 2 | add takes two arguments of type Int and returns a an Int. So think of the last one as return type. |

Type annotations in Elm are optional, because the compiler is able to infer the types statically. Most people tend to use type annotations because they provide very useful documentation. When working with Elm it’s really something you quickly have to learn, because most documentation will use them and the Elm compiler will most certainly expose you to them.

Getting the actual values from a Maybe

Ok so I have this maybe thing which can be a Just some value or Nothing. But how do I get hold of the value so I can work with it ?

myList : List String (1)

myList = ["First", "Second"] (2)

-- List.head : List a -> Maybe.Maybe a (3)

case List.head myList of (4)

Nothing -> (5)

"So you gave me an empty list!"

Just val -> (6)

val

-- returns "First"| 1 | Type annotation for myList. It is a List of String. It’s just a value, so that’s why there is no arrows in the type annotation |

| 2 | We are using a list literal to define our list. Each list item must be separated by a comma. It’s also worth noting, that every item in the list must be of the same type. You can’t mix Strings with Ints etc. The Elm compiler will yell at you if you try |

| 3 | I’ve added the type annotation for the List.head function. Given a List of values with type a it will return a Maybe of type a. List.head returns the first item of a List. The reason it returns a Maybe is because the List might be empty. |

| 4 | You can think of case as a switch statement on stereoids. Since List.head return a Maybe we have to possible case’s we need to handle |

| 5 | In this instance we can see from the code this case will never happen, we know myList contains items. The Elm compiler is really smart, but not that smart so it doesn’t know the list is empty. |

| 6 | This case unwraps the value in our Just so that we can use it. We just return the value, which would be "First". The value is unwrapped using something called pattern matching. In JavaScript terms you might think of it as destructuring |

The Maybe type is defined in a module called Maybe. In addition to the Maybe type it also includes a collection

of handy functions that makes it handy to work with Maybe types in various scenarios.

myList = ["First", "Second", "Third"]

first = List.head myList

second = List.head (List.drop 1 myList)

tail = List.tail myList -- Just ["Second","Third"] : Maybe (List String)-- Maybe.withDefault : a -> Maybe a -> a (1)

Maybe.withDefault "No val" first -- -> "First" (2)

Maybe.withDefault "No val" (List.head []) -- -> "No val"| 1 | Maybe.withDefault takes a default value of type a a Maybe of type a. It returns the value of the maybe if it has a value (tagged Just) otherwise it returns the provided default value |

| 2 | In the first example first is Just "First" so it unwraps the value and returns that. In the second example there is no value so it returns the provided default |

-- Maybe.map : (a -> b) -> Maybe a -> Maybe b (1)

Maybe.map String.toUpper first -- -> Just "FIRST" (2)

Maybe.map String.toUpper Nothing -- -> Nothing

-- Maybe.map2 (a -> b -> c) -> Maybe a -> Maybe b -> Maybe c (3)

Maybe.map2 (\a b -> a ++ ", " b) first second -- -> Just "First, Second" (4)

Maybe.map2 (\a b -> a ++ ", " b) first Nothing -- -> Nothing

Maybe.map2 (++) first second -- -> Just "First, Second" (5)| 1 | Maybe.map takes a function which has the signature (a → b), that means a function that takes any value of type a and return a value of type b (which can be the same type or a completely different type). The second argument is a Maybe (of type a). The return value is a Maybe of type b. So Maybe.map unwraps the second argument, applies the provided function and wraps the result of that in a Maybe which in turn is returned. |

| 2 | String.toUpper takes a String (a if you like) and returns a String (b if you like). String.toUpper doesn’t understand Maybe values, so to use it on a Maybe value we can use Maybe.map |

| 3 | Maybe.map2 is similar to Maybe.map but the function in the first argument takes two in parameters. In addition to the function param we provide two Maybe values. These two doesn’t need to be of the same type, but happens to be so in our example. There is also map3, map4 etc up to map8 |

| 4 | If any or both of the two Maybe params are Nothing the result will be Nothing. |

| 5 | In the example above we used an anonymous function (lambda). However ++ is actually a function that takes two arguments so we can use that as the function argument |

-- Maybe.andThen Maybe.Maybe a -> (a -> Maybe b) -> Maybe b (1)

Maybe.andThen tail List.head -- -> Just "Second" (2)

tail `Maybe.andThen` List.head -- -> Just "Second" (3)

tail

`Maybe.andThen` List.head

`Maybe.andThen` (\s -> Just (String.toUpper s)) -- -> Just "SECOND" (4)

Just []

`Maybe.andThen` List.head

`Maybe.andThen` (\s -> Just (String.toUpper s)) -- -> Nothing (5)| 1 | Maybe.andThen resembles Maybe.map but there are two vital differences. The function argument comes as the second param (we’ll come back to why), secondly the function in the function argument must return a Maybe rather than a plain value. |

| 2 | The first argument tail is a Maybe, the second argument is List.head which is a function that takes a list as an argument and returns a Maybe, so that conforms to the function params signature required by Maybe.andThen |

| 3 | In this version we use the infix version of andThen (marked by backticks before and after). This is the reason the function argument comes second, so you typically use Maybe.andThen when you you need to work with maybes in a pipeline sort of fashion. |

| 4 | This is an example of piping values when dealing with Maybe values. We start with the tail of our list and then we pick out the head of that list and then we convert the value of that to uppercase |

| 5 | You can almost think of andThen as a callback. If any step of the chain returns Nothing, the chain is terminated and Nothing is returned |

type Perhaps a = Absolutely a | NotSoMuchOf course interop with others will be an issue and Maybe has some advantages being part of the core library. But still

if you really really want to…

JavaScript null/undefined

function headOfList(lst) {

if (lst && lst.length > 0) {

return lst[0];

} else {

// hm... not sure. let's try null

return null;

}

}

function tailOfList(lst) {

if (lst && lst.length > 1) then

return lst.slice(0);

} else {

// hm... not sure. let's try null

return null;

}

}

var myList = ["First", "Second", "Third"];

var first = headOfList(myList); // "First"

var second = headOfList(tailOfLIst(myList)) // "Second"

var tail = tailOfList(lst); // ["First", "Second"]

first // "First"

headOfList([]) // null (1)

first.toUpperCase() // "FIRST"

headOfList([]).toUpperCase() // Type Error: Cannot read property 'toUpperCase' of null (2)

first + ", " + second // "First, Second"

first + ", " + null // "First, null" (3)

headOfList(tail).toUpperCase() // "SECOND"

headOfList([]).toUpperCase() // Type Error: Cannot read property 'toUpperCase' of null (4)| 1 | An empty list obviously doesn’t have a first item. |

| 2 | If this was in a function you might guard against this. But what would you return ? Would you throw a exception ? |

| 3 | Doesn’t look to cool, so you would have to make sure you guarded against this case. Let’s hope you tested that code path, otherwise it’s lurking there waiting to happen ! |

| 4 | Same as 2 |

Okay so most of this cases are pretty silly, we would have to come up with something more real life with functions calling functions calling functions etc. The bottom line is that you have to deal with it, but it’s up to you all the time to make sure nulls or undefined doesn’t sneak in. In most cases there are simple non verbose solutions to deal with them, but it’s also quite easy to miss handling them. If you do it can sometimes be quite a challenge tracking down the root cause.

It’s undoubtably a little more ceremony in Elm, but in return you will not ever get nullpointer exceptions.

Introducing Maybe in JavaScript

If you are from a JavaScript background the blogpost Monads in JavaScript gives you a little hint on how you could implement Maybe in JavaScript.

Let’s borrow some code from there and see how some of the examples above might end up looking

function Just(value) {

this.value = value;

}

Just.prototype.bind = function(transform) {

return transform(this.value);

};

Just.prototype.map = function(transform) {

return new Just(transform(this.value));

};

Just.prototype.toString = function() {

return 'Just(' + this.value + ')';

};

var Nothing = {

bind: function() {

return this;

},

map: function() {

return this;

},

toString: function() {

return 'Nothing';

}

};function listHead(lst) {

return lst && list.length > 0 ? new Just(lst[0]) : Nothing;

}

function listTail() {

return lst && list.length > 1 ? new Just(lst.slice[1]) : Nothing;

}var myList = ["First", "Second", "Third"];

var first = listHead(myList);

var second = listTail(myList).bind(t => listHead(t));

var tail = listTail(myList);

// Similar to Maybe.map in Elm

first.map(a => a.toUpperCase()) // Just {value: "FIRST"} (1)

Nothing.map(a => a.toUpperCase()) // Nothing (object) (2)

// Similar to Maybe.map2 in Elm

first.bind(a => second.map( b => a + ", " + b)) // Just { value: 'First, Second' } (3)

first.bind(a => Nothing.map( b => a + ", " + b)) // Nothing (object)

// Similar to Maybe.andThen in Elm

tail.bind(a => listHead(a)).bind(b => new Just(b.toUpperCase())) // Just { value: 'SECOND' } (4)

new Just([]).bind(a => listHead(a)).bind(b => new Just(b.toUpperCase())) // Nothing (object) (5)| 1 | first is a Just object. Since it has a value the arrow function is run as expected |

| 2 | When the value is Nothing (a Nothing object) toUpperCase is never run and the Nothing object is returned |

| 3 | In the arrow function of bind for first we ignore the unwrapped value and call map on second with a new arrow function which now has both the unwrapped value of both a and b. We concatenate the values and the map function ensures the result is wrapped up in a Just object If you remember the elm case for map2, that was a separate function. Here map is just a convenience to wrap up the innermost value in a Just. |

| 4 | tail is a Just object with the value ["First", "Second"] in the first level arrow function we pick out the head which returns a Just object with the value "Second". In the innermost arrow level function we do upperCase on the value and wrap in it a Just which is the end result. |

| 5 | We are starting with Just with a value of an empty array. In the first level arrow function we try to pick out the head of the list. Since that will return a Nothing object, Nothing passes straight through the second level arrow function, never executing the toUpperCase call. |

So as you can see it is possible to introduce the notion of Maybe in JavaScript. There are several libraries out there to choose from I haven’t really tried any of them. Regardless the issue you’ll be facing is that the other libraries you are using probably won’t be using your representation of Maybe if at all. But hey, maybe it’s better with something than nothing. Or whatever.

Wrapping up

There is clearly a slight cost with explicitly handling nothingness everywhere. In Elm you basically don’t even have a choice. The type system and the compiler will force you into being explcit about cases when you don’t have a value. You can achieve the same as with null but you always have to handle them. In your entire program. The most obvious benefit you get, is that you simply will not get null reference related errors in Elm. When calling any function that accepts Maybe values as input params or return Maybe values you will be made well aware of that. The compiler will let you know, but typically you would also see type annotations stating this fact too. This explicitness is actually quite liberating once you get used to it.

In JavaScript you can try to be more explicit with nulls. You can even reduce the chances of null pointers ever happening by introducing a Maybe/Option like concept. Of course you wouldn’t introduce the possibility of null pointers in your code. However there’s a pretty big chance some bozo,responsible for one of the 59 libs you somehow ended up with from npm, have though.

There are plenty of bigger challenges than null pointer exceptions out there, but if you could avoid them altogether, surely that must a be of some benefit. I’ll round off with the obligatory quote from Tony Hoare as you do when one pays tribute to our belowed null.

I call it my billion-dollar mistake. It was the invention of the null reference in 1965. At that time, I was designing the first comprehensive type system for references in an object oriented language (ALGOL W). My goal was to ensure that all use of references should be absolutely safe, with checking performed automatically by the compiler. But I couldn’t resist the temptation to put in a null reference, simply because it was so easy to implement. This has led to innumerable errors, vulnerabilities, and system crashes, which have probably caused a billion dollars of pain and damage in the last forty years.

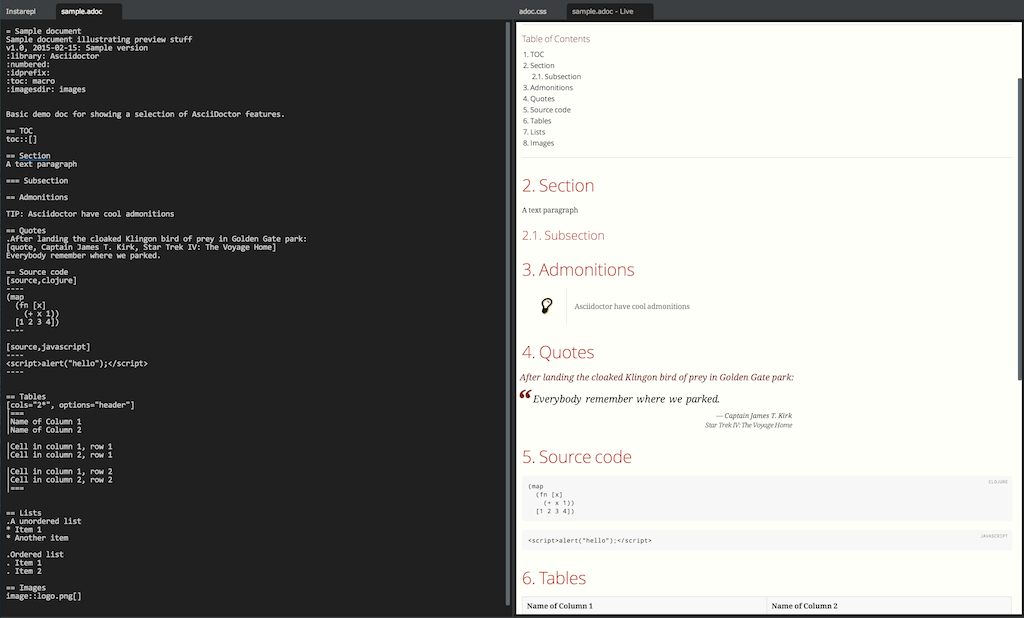

Elm package docs preview in Light Table

28 March 2016

Tags: elm clojurescript lighttable

TweetMaybe you are a package author for Elm packages you wish to publish to http://package.elm-lang.org/ . Or maybe you are thinking about authoring a package. Before you publish something to the package repo you have to write documentation for your package. Wouldn’t it be sweet if you could preview the generated documentation from the comfort of your editor ?

The good news is that with the latest (0.3.6) edition of the elm-light plugin you can !

Demo

-

Preview how the docs will look for each individual module

-

The preview is updated whenever you save your (exposed) Elm module file

-

Layout pretty close to how it will look on http://package.elm-lang.org/ once published

-

Fast (at least on my machine !)

-

Minor detail, but the entire preview UI is also implemented in Elm (ultimate dogfooding). It’s basically a modified and simplified implementation of the package preview code for http://package.elm-lang.org/

Resources

-

https://github.com/elm-lang/package.elm-lang.org for most of the Elm ui code used

-

Check out the plugin: elm-light

Elm and ClojureScript joining forces in the elm-light plugin

14 March 2016

Tags: elm clojurescript lighttable

TweetThe elm-light plugin provides a pretty useful featureset for developing elm applications. Until now all features have been implemented using a combination of ClojureScript and JavaScript. But wouldn’t it be cool if the plugin implemented Elm features using Elm where that’s feasible ? Elm compiles to JavaScript and JavaScript interop in ClojureScript is quite easy so it shouldn’t be that hard really.

If nothing else I thought it would be a fun challenge, so I set forth and decided to implemented a simple module browser for Elm projects.

Elm for the UI